Research

MIMOSA organizes its research into five interdependent Work Packages (WP) that transversely address four challenges (C).

Project Objective

Define a methodology to extract interpretable, accurate, and ethically responsible predictive models for decision-support systems.

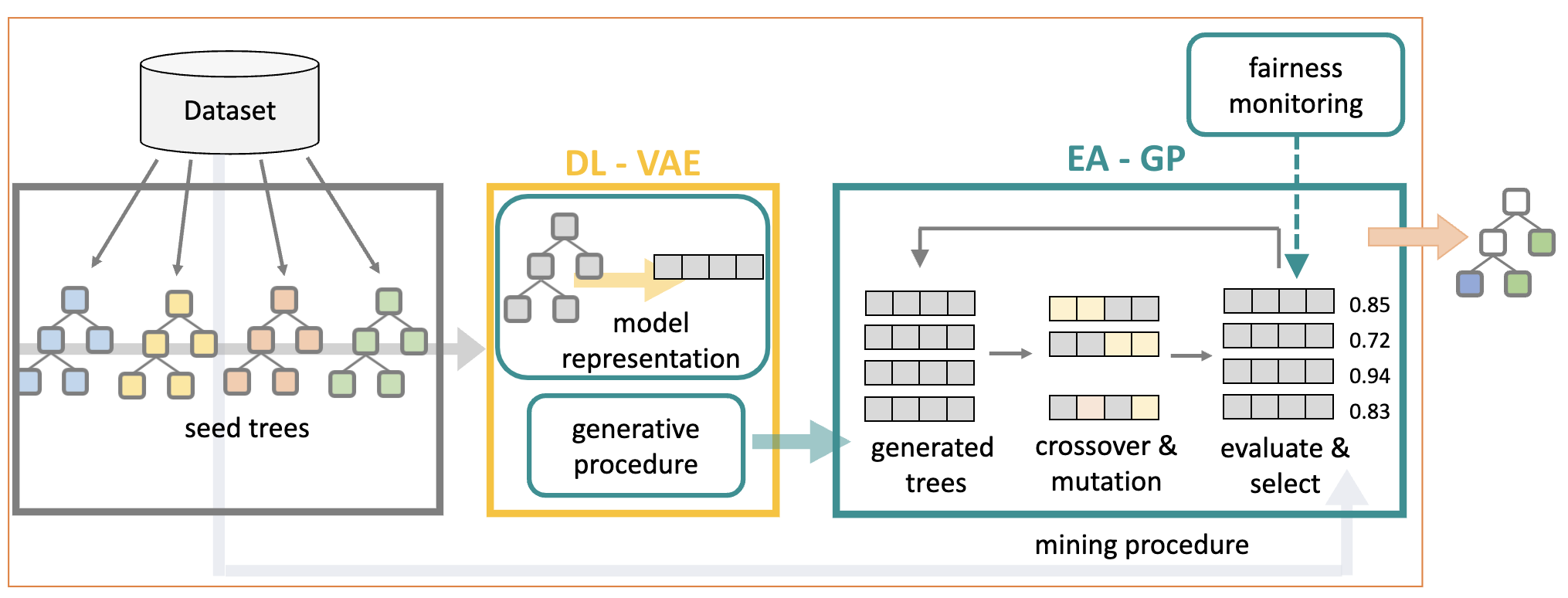

Figure 1: MIMOSA's Workflow

Scientific Contributions

Design of a methodological framework for generating and Mining Interpretable Models explOiting Sophisticated incomprehensible Algorithms.

Theoretical:

- define prototypical interpretable models

- formalize manipulable model representations

- pioneer model generation procedures

- develop ethical-by-design model mining procedures

Methodological:

- cognitive evaluation of interpretable models

- implementation of model mining procedures

- estimation of trust in AI systems

Practical:

- identify the best procedures for each problem setting

- guidelines for obtaining compliant and reliable models

- open-source proof-of-concept system

Social:

- bridge the gap between AI and the cognitive sciences

- advance the strategic objectives of the EU

Breakthrough

The MIMOSA project introduces a paradigm shift from “data mining” to “model mining” that aims to revolutionize the theory and practice of AI and to align human reasoning with the logic of machines.

MIMOSA aims to advance AI by developing a family of processes that extract interpretable, high-performing models by exploiting sophisticated algorithms. These outcomes are expected to be significant scientific achievements: they will bring a comprehensive understanding of current AI practice and foster human-machine interaction. They will also open a new paradigm for designing decision systems that can be readily aligned with human values.

Research Lines

- C1 Identify suitable interpretable models for various problems and data types

- WP1 Definition of Interpretable Models [WP1 is dedicated to the theoretical and empirical characterization of possible interpretable models depending on the problem setting and data type]

- C2 Define adequate model representations and model generative approaches

- WP2 Design of Methods for Mining Interpretable Models [WP2 focuses on the definition of approaches for mining interpretable models depending on various sophisticated algorithms]

- C3 Ensure that models respect ethical properties

- WP3 Refinement of Models with Ethical Properties [WP3 aims at refining interpretable-by-design models to account for desired additional properties such as fairness, privacy, causality, etc.]

- C4 Demonstrate the effectiveness of the generated interpretable models

- WP4 Real Case Studies [WP4 tests the effectiveness of the approaches developed in real case studies]

- WP5 Experimental Evaluation, Software Development, Dissemination [WP5 describes the evaluation, software development, and dissemination strategy]

Scientific Board

Acknowledgments

This project is funded by the Italian Fondo Italiano per la Scienza (FIS) under project FIS00001966 MIMOSA.

Principal Investigator (PI): Riccardo Guidotti

Host Institution: University of Pisa, Italy

Project duration in months: 60 (25/03/2024 - 24/03/2029)